The Vingean Singularity

Updated Sep 21, 2012

There are scientists who say that technological progress will accelerate indefinitely, causing a mind-boggling change in the basic principles of what we humans affectionately call 'home'. It is a widely held belief among Singularitarians that this event, known as the Singularity1, will occur sometime before the year 2100. Some attribute the idea to Vernor Vinge and claim that it first appears in his science-fiction book True Names and Other Dangers, as one of the author's answers to a very interesting question: what would happen if the human race created an artificial intelligence more intelligent than its creators? A character in the book finds himself 'precipitated over an abyss' when trying to predict future technology by extrapolating current trends. Vinge argues that when humans create an intelligence greater than their own, 'human history will have reached a kind of singularity'.

Although the Singularity is sometimes described as Vinge's brainchild, the idea was briefly mentioned in the 1950s by John von Neumann, as Raymond Kurzweil points out in his excellent précis of the book The Singularity Is Near. John von Neumann observed that...

... the ever accelerating progress of technology... gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.

A Brief Explanation

Imagine a curve that represents the technological progress of human beings throughout history. Most people would agree that we have come a long way in only a century; we've invented new technology, learned new things and developed as a race. Thus the curve slopes upward.

We can examine this curve and extrapolate to create a function that describes the level of progress at any given point in time. Many have done this, including Ray Kurzweil, the renowned inventor of synthesisers and text-to-speech machines and author of The Age of Intelligent Machines, which won the Association of American Publishers' Award for the Most Outstanding Computer Science Book of 1990. Kurzweil has shown that the doubling period of computer speed is shrinking: at the beginning of the 20th Century it took about three years to double the speed and memory capacity of computing machines, whereas the same progress is now achieved in about one year. He claims that these trends will continue, and that computers will be able to emulate human brains by the year 2020.
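To get a feel for what a shrinking doubling period means, the following minimal sketch counts how many doublings fit into a century. It assumes, purely for illustration, that the doubling period falls linearly from three years to one year over 100 years; this linear model and the function names `doublings_numeric` and `doublings_exact` are hypothetical and are not Kurzweil's actual data or method. The numeric sum is checked against the closed-form integral.

```python
import math


def doublings_numeric(years: float, start: float, end: float, steps: int = 100_000) -> float:
    """Count doublings over `years` when the doubling period changes linearly
    from `start` to `end` years (a hypothetical model, not Kurzweil's data)."""
    dt = years / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt  # midpoint of this small time step
        period = start + (end - start) * t / years
        total += dt / period  # fraction of one doubling completed during dt
    return total


def doublings_exact(years: float, start: float, end: float) -> float:
    """Closed form of the same integral: years / (end - start) * ln(end / start)."""
    if start == end:
        return years / start
    return years / (end - start) * math.log(end / start)


for end in (3.0, 1.0):
    n = doublings_numeric(100, 3.0, end)
    print(f"period 3 -> {end:.0f} years: {n:.1f} doublings "
          f"(exact {doublings_exact(100, 3.0, end):.1f}), growth factor {2 ** n:.2e}")
```

Under these assumptions a constant three-year period gives about 33 doublings per century, while a period shrinking to one year gives about 55 (50 ln 3), a growth factor roughly two million times larger.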

If and when computers become intelligent, they could themselves construct new computers, causing a massive acceleration in technological progress. One way to visualise this acceleration is to consider the following rather trivial function.

f(t) = -1 / t

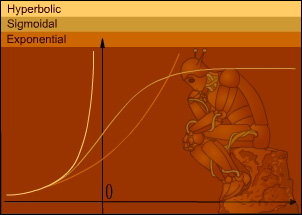

Readers with a working knowledge of mathematics can see that this function gives very large values for negative values of t close to zero. As t approaches zero from the negative side, the value of the function approaches infinity. This is called hyperbolic growth. A hyperbolic function eventually grows much faster than an exponential function, as the following table shows. Note that t takes on negative values; think of it as a countdown to the Singularity.

Exponential versus Hyperbolic

| Time (t) | Exponential, 2^(t+1) | Hyperbolic, -1/t |
|---|---|---|
| -2.0 | 0.5 | 0.5 |
| -1.5 | 0.71 | 0.67 |
| -1.0 | 1 | 1 |
| -0.5 | 1.41 | 2 |
| -0.01 | 1.986 | 100 |
| -0.000001 | 2 (approx.) | 1,000,000 |
| 0 | 2 | undefined |
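The values in the table can be reproduced with a few lines of Python. This is only an illustrative sketch; the function names `exponential` and `hyperbolic` are our own, and the hyperbolic function returns `None` at t = 0 to mark the point where it is undefined.

```python
from typing import Optional


def exponential(t: float) -> float:
    """Exponential column of the table: 2^(t+1)."""
    return 2 ** (t + 1)


def hyperbolic(t: float) -> Optional[float]:
    """Hyperbolic column of the table: -1/t, or None where it is undefined (t = 0)."""
    if t == 0:
        return None  # division by zero: the mathematical singularity
    return -1 / t


for t in (-2.0, -1.5, -1.0, -0.5, -0.01, -0.000001, 0.0):
    h = hyperbolic(t)
    h_text = "undefined" if h is None else f"{h:,.2f}"
    print(f"{t:>10} | {exponential(t):.3f} | {h_text}")
```

Note how the exponential column creeps towards 2 while the hyperbolic column explodes as t nears zero.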

Given only a few points from a curve, there are several ways to extrapolate. Besides comparing the growth of different functions, the graph above shows that seemingly hyperbolic growth could in fact be exponential or even sigmoidal (S-shaped). This is discussed further below.

Exponential functions have well-defined outputs for all inputs, unlike our hyperbolic function f(t), which is undefined at t = 0 because division by zero is undefined. This is a mathematical singularity, corresponding to the Singularity. Extending the analogy a bit, negative values of t represent the time before the Singularity.
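One way to see why self-improving technology might follow a hyperbola rather than an exponential is a short derivation. Here x(t) stands for the level of progress, and the feedback rules are simplifying assumptions for illustration only, not a model proposed by Vinge or Kurzweil. If the rate of progress is proportional to the current level, growth is exponential; if better technology also speeds up its own improvement, so that the rate grows with the square of the level, the solution is hyperbolic:

$$\frac{dx}{dt} = kx \quad\Rightarrow\quad x(t) = x_0 e^{kt}$$

$$\frac{dx}{dt} = x^2 \quad\Rightarrow\quad \int \frac{dx}{x^2} = \int dt \quad\Rightarrow\quad -\frac{1}{x} = t + C \quad\Rightarrow\quad x(t) = -\frac{1}{t + C}$$

Choosing C = 0 gives exactly f(t) = -1/t. The exponential solution stays finite for every t, whereas the hyperbolic one reaches infinity at the finite time t = 0.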

The term 'singularity' can be interpreted either literally or figuratively. Interpreted literally, the Singularity would mean making infinite progress (whatever that means) in finite time, a rather absurd notion that is impossible to fully grasp. According to Vinge, 'new models must be applied' for humans to have the slightest chance of understanding what is going on.

The figurative interpretation is not only more believable but also much easier to visualise. It is presented in the book Can We Avoid a Third World War around 2010? by Peter Peeters. The author extrapolates historical trends and finds the hyperbolic growth described above, but uses the method strictly to draw attention to possible crisis points. He claims that it can predict moments of change so fundamental that pessimists would call them 'the end of the world as we know it', and he supports this by pointing out that some historical crises, such as the First World War, coincide with his calculated crisis points. It should be noted, however, that the Second World War did not fit the model.

Thus, the conservative view of the Singularity is that it is a point of change or crisis. Put another way, the trends are real, but their significance and consequences are still being debated.
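Peeters' exact procedure is not described here, but one common way to extrapolate hyperbolic growth to a crisis date can be sketched as follows. If a quantity grows as f = C / (T - t), then 1/f is a straight line in t that reaches zero at the blow-up date T, so an ordinary least-squares line through the reciprocals gives an estimate of T. The function name `fit_singularity_date` is hypothetical, and the data below are synthetic values generated from a hyperbola that blows up in 2030; they are not real historical measurements.

```python
def fit_singularity_date(years: list[float], values: list[float]) -> float:
    """Fit values ~ C / (T - year) and return the estimated blow-up date T.

    If f = C / (T - t), then 1/f = (T - t) / C is a straight line in t,
    so a least-squares line through (t, 1/f) crosses zero at t = T.
    """
    ys = [1.0 / v for v in values]
    n = len(years)
    mean_t = sum(years) / n
    mean_y = sum(ys) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(years, ys))
    var = sum((t - mean_t) ** 2 for t in years)
    slope = cov / var
    intercept = mean_y - slope * mean_t
    return -intercept / slope  # year where the fitted line for 1/f hits zero


# Synthetic (made-up) data generated from a hyperbola that blows up in 2030.
years = [1900.0, 1925.0, 1950.0, 1975.0, 2000.0]
values = [1000.0 / (2030.0 - t) for t in years]
print(round(fit_singularity_date(years, values), 1))
```

With real, noisy data the estimate of T can shift considerably, which is one reason the figurative reading treats such dates as possible crisis points rather than firm predictions.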

Paths to the Singularity

The Singularity can be reached in a number of different ways. Here are some examples.

Neurohacking - In this scenario, humans learn how to improve the brain, either purely biologically or by introducing some kind of technology into it (that is, cybernetics). This ties in a bit with transhumanism. Enhanced humans could in turn devise better enhancements, and the resulting positive feedback loop is what gives rise to the Singularity.

Self-enhancing computers - Most people who know a bit about computers have heard of Moore's Law, often summarised as processing power doubling roughly every 18 months. But what would happen if we created an artificial intelligence (AI) as smart as we are and let it construct new computers? It's a textbook example of a positive feedback loop. At first, there would be no difference; processing power would double in another 18 months. After that, since the AI is now running twice as fast, processing power would double in nine months2, then 4.5 months, and so on. Because 18 + 9 + 4.5 + ... adds up to only 36 months, an unlimited number of doublings would fit into a finite time, and hey presto! We've reached the Singularity.

Uploading - Instead of creating intelligent software the hard way, by writing all the code, why not tap into the software already in our own heads? Some claim that it is theoretically possible to emulate a human brain (so-called 'whole brain emulation'), and that it will become possible to upload a person's mind into a computer. That would create a sentient computer program able to enhance its own code, setting off the positive feedback loop that leads to the Singularity.

Nanotechnology - It is already possible to construct extremely small engines at the molecular level. This is called nanotechnology, and it is often associated with so-called 'nanobots', hypothetical robots measuring only a few nanometres across. In theory, nanotech could be used for neurohacking, to build an intelligent computer, or for whole brain emulation. A good source of information for the aspiring nanotechie is the Foresight Institute, a 'non-profit educational organisation formed to help prepare society for anticipated advanced technologies'. Another important nanotech website is that of Zyvex, the first molecular nanotechnology company.
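The arithmetic behind the self-enhancing computers scenario above can be checked with a short sketch. It assumes, as the scenario does, a first doubling period of 18 months and that each AI generation halves the next period; the names `FIRST_PERIOD`, `SPEEDUP` and `doubling_schedule` are illustrative, not taken from any real forecast.

```python
FIRST_PERIOD = 18.0  # months, the Moore's Law figure used in the scenario
SPEEDUP = 2.0        # assumption: each AI generation halves the next doubling period


def doubling_schedule(first_period: float = FIRST_PERIOD, speedup: float = SPEEDUP, count: int = 20):
    """Yield (doubling number, month at which it completes) when every
    doubling period is `speedup` times shorter than the previous one."""
    elapsed = 0.0
    period = first_period
    for n in range(1, count + 1):
        elapsed += period
        yield n, elapsed
        period /= speedup


for n, month in doubling_schedule(count=10):
    print(f"doubling {n:2d} completes at month {month:.4f}")

# The periods form a geometric series 18 + 9 + 4.5 + ...,
# whose sum is first_period / (1 - 1/speedup) = 36 months.
limit = FIRST_PERIOD / (1 - 1 / SPEEDUP)
print(f"all doublings complete before month {limit}")
```

Each extra doubling lands closer to month 36 without ever passing it, which is the finite-time blow-up that the hyperbola f(t) = -1/t describes. With any speedup of 1 (no self-improvement) the series never converges and growth stays exponential.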

Singularitarians

Although most of this sounds like pure science fiction, like much science fiction it is slowly becoming science. Some people claim that it is perfectly logical to expect the Singularity to occur no later than the year 2010, if we work hard enough. These are, of course, the Singularitarians. Note that the Singularitarians are not really an organised community; the term describes people who are actively working towards the Singularity. Some examples of organised efforts to reach the Singularity follow.

Among those working towards the Singularity is the Singularity Institute, whose members feel that we do not have much time before research on nanotechnology reaches maturity. They often cite the 'grey goo problem'3, and claim that to avoid a global catastrophe, we need to reach the Singularity first, driven by a self-enhancing AI4.

The most motivated agent of the Singularity seems to be Eliezer Yudkowsky, fittingly one of the founders of the Singularity Institute. His website, The Low Beyond, is a vast source of information pertaining to the Singularity.

John Smart, a private teacher from Los Angeles, has a relatively conservative attitude towards the Singularity. He is making people aware of the Singularity through his website Singularity Watch, a source of carefully developed writing on the subject at an introductory and multidisciplinary level. According to the website, a 'singularity watcher' is 'neither absolutely convinced - nor uncritically happy - that the singularity is going to happen, but they do believe this issue deserves serious scientific investigation'. Due to his more conservative approach, Smart doesn't capitalise 'Singularity'.

The proposed society called the Singularity Club has an attitude towards the Singularity that is diametrically opposed to Smart's. It is a more radical and controversial movement that claims that 'one must have the desire to not only survive the Singularity, but to ride its powerful wave right to Ascension (godhood). To, in effect, become the Singularity.'

Conclusion

It is important to stress that these are all speculations, conjectures and theories, and like all theories, the Singularity is subject to debate. Many point to the laws of physics: an infinitely fast computer-making computer would probably generate so much heat that it would incinerate the planet. Others point out that positive feedback can only take us so far, and that growth grinds to a halt beyond a certain threshold, so that the curve resembles a sigmoid far more than a hyperbola. A familiar example is audio feedback: when a microphone is held up to a loudspeaker that plays back the microphone's signal, the sound grows louder and louder, but not indefinitely, because the system has several built-in limits, such as the maximum output of the amplifier and loudspeaker.
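The difference between unlimited positive feedback and feedback with a built-in limit can be illustrated by comparing an exponential curve with a logistic (sigmoidal) one. This is a minimal sketch; the growth rate of 0.5 and the ceiling `capacity` of 100 are arbitrary assumptions, where `capacity` plays the role of something like the amplifier's maximum output in the microphone example. Both curves start at the same value and grow almost identically at first.

```python
import math


def exponential_growth(t: float, x0: float = 1.0, rate: float = 0.5) -> float:
    """Unlimited positive feedback: x(t) = x0 * e^(rate * t)."""
    return x0 * math.exp(rate * t)


def logistic_growth(t: float, x0: float = 1.0, rate: float = 0.5, capacity: float = 100.0) -> float:
    """Positive feedback with a built-in limit: the logistic (sigmoidal) curve,
    which starts out almost exponential and levels off at `capacity`."""
    return capacity / (1 + (capacity / x0 - 1) * math.exp(-rate * t))


for t in range(0, 41, 5):
    print(f"t={t:2d}  exponential={exponential_growth(t):14.1f}  logistic={logistic_growth(t):6.1f}")
```

Early on the two columns are nearly indistinguishable, which is why a few data points cannot tell us which curve we are on; only later does the logistic curve flatten out just below its ceiling while the exponential keeps climbing.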

There can be no conclusive evidence that the Singularity will occur until it actually happens. Just as it is impossible to make a 100% accurate weather forecast, it is impossible to know for certain whether a given event will ever come to pass. The Singularity nevertheless seems plausible because there are many possible paths to it, and many current trends point in that direction.

Many information theorists, evolutionary theorists and computer scientists come to the same conclusion: we will reach the Singularity, whatever it may be, in one way or another.